This post gives a longer response to Henrik Ebbeskog’s tweet:

https://twitter.com/henebb/status/896404981003296768

My personal response to this tweet is that it represents a one-sided, static, and unsophisticated view of what a CEO may want. I am going to use this question as a launch point to show that a range of approaches is possible. Which one is ‘correct’ depends heavily on the CEO’s mental models (and motivations), the organization, and the environment in which the organization finds itself. In this post, I am only trying to disprove the hypothesis that the CEO must have an estimate, in the traditional sense, at the beginning of an initiative. I’m also going to explore this from a financial perspective, not so much from what a team may do around story points, et cetera, though I will make a short mention of that at the end.

For context, the scenario is a SaaS company that provides financial compliance services. The company already has revenues in the high tens of millions of dollars and thus is not a start-up. The CEO is interested in potentially expanding into a new market by launching new product services that help clients monitor environmental compliance.

A Traditional Estimation Mindset

If the CEO is in a traditional estimation mindset, she or he will be interested in knowing as much about the iron triangle’s values of cost, time, and scope as possible. The CEO will turn to marketing (the Chief Marketing Officer if they have one) and ask, “what are all the environmental compliance monitoring needs, who are our potential customers, and what is the potential revenue for these services?” Before marketing runs off and does this research, the CEO also asks, “how long will it take you to research these, and how much will this research cost?” These are of course fair questions; the CEO wants to know the potential cost of the information, and whether it can be obtained in a reasonable timeframe, before giving a go-ahead.

OK, an estimate is made on cost and time (hopefully using historical data if they have it), and the answer sounds reasonable to the CEO, so the green light to proceed is given. Marketing then does the work needed to understand the market space, taking about one quarter at roughly $150K; this is on schedule and on budget against the estimate they gave the CEO (1 quarter and $150K +/- $10K; woot! win!). They may research compliance needs on the web, survey companies, see if competitors exist, et cetera. It looks promising; revenue is projected at $10M for the first year, $20M for the second year, and $30M for the third year.

Now the CEO turns to the Chief Technical Officer, hands over marketing’s findings on scope, and asks, “how long will it take you to build this and when will it be done?” Of course the CTO doesn’t give the CEO a flippant answer. Instead, the CTO pulls together a cross-functional team, including an experienced product manager (let’s assume they have been using Scrum/XP practices for years), and this team defines an MVP with a rough price tag of $225K +/- $50K. They also estimate a first marketable release a quarter after that and (after talking with marketing) 2 subsequent improvement releases, based on prioritized environmental monitoring needs, in the two quarters after that, for a total cost of $900K +/- $200K. Cool beans! Let’s go!

They execute and, for simplicity’s sake, they stay true to their estimate of $900K in total at $225K per quarter. I want to point out that once the team was pulled together, the cost over a time interval is known, provided it is a stable cross-functional team.
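
The point about a stable team having a known cost per interval can be sketched in a few lines of Python. This is only an illustration using the scenario's figures; the four-quarter breakdown (MVP, first marketable release, two improvement releases) is my reading of the scenario, and the function names are hypothetical.

```python
# Figures from the scenario, in thousands of dollars ($K).
QUARTERLY_COST_K = 225   # cost of the stable cross-functional team per quarter
QUARTERS = 4             # assumed: MVP + first marketable release + 2 improvement releases
ESTIMATE_K = 900         # total estimate given to the CEO
TOLERANCE_K = 200        # the +/- range on that estimate


def cumulative_cost(quarterly_cost_k, quarters):
    """Running total of spend at the end of each quarter for a stable team."""
    return [quarterly_cost_k * q for q in range(1, quarters + 1)]


def within_estimate(actual_k, estimate_k, tolerance_k):
    """True if the actual spend falls inside the estimate's +/- range."""
    return abs(actual_k - estimate_k) <= tolerance_k


costs = cumulative_cost(QUARTERLY_COST_K, QUARTERS)
print(costs)                                                 # [225, 450, 675, 900]
print(within_estimate(costs[-1], ESTIMATE_K, TOLERANCE_K))   # True
```

Nothing here depends on having estimated scope up front: the running cost follows from team size and elapsed time alone.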

The mindset here is understanding risk before executing (and of course managing it during execution).

A Lean Start-up Mindset

The CEO is interested in exploring the same environmental compliance space. He talks with his other executives and they decide to form a cross-functional team of marketing, which includes an experienced product manager, and IT personnel. They pull together a hypothesis of customer, problem, and solution and identify a set of assumptions about it. The team and executives set a vision for the need, along with boundary constraints that keep the effort aligned with the company’s core vision and mission. Within these constraints is a set of questions that, once answered, serve as the criteria for transitioning to a development effort, because the answers provide enough detail to define an MVP and MMP as well as the revenue stream for the MMP and other potential known releases of the SaaS product. The CMO is appointed to oversee this effort, and they agree that after each assumption is tested he will decide whether to proceed, pivot, or kill. It’s worth noting that at this point none of the iron triangle is known. The cost per week of the assigned team is known (just as it was AFTER marketing provided the estimate above and was told to execute its research).

The first assumption is that monitoring a particular environmental compliance aspect is unserved in the marketplace. The team looks for evidence of this through market research and a survey of their financial service customers in the same space. It is not disproved, so the CMO gives a proceed (or in start-up terms, persevere) signal. They test the next assumption, and then the next. Sometimes they build a quick prototype to see if a particular compliance rule can be enabled (it was the riskiest assumption). At the end of a quarter and $150K they know what the MVP and MMP look like and what the next 2 releases look like. They have the same revenue stream predicted as the traditional team.

At this point the team is reconfigured to look like the Scrum/XP team above and proceeds (however, no estimate is asked for), and we’ll say that they deliver just as the team above did, costing $900K. The important point is that the same stable cost over the time intervals applies as above. At each quarter a proceed or kill decision is made based on the throughput of work completed compared to what remains. This is a form of estimation – yes, I realize it. What is different in this case is when the estimate is made; I didn’t start with an estimate. A rougher approach is simply comparing what has been completed to what’s left in the backlog. I could also re-evaluate the marketplace at this point using the competitive analysis approach I used earlier and see if I want to continue (basing the decision on expected value in terms of a change in potential revenue – another form of estimate).
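
As a rough illustration of the quarterly proceed-or-kill check described above, here is a minimal Python sketch that forecasts from observed throughput instead of an up-front estimate. The throughput numbers, the backlog size, and the idea of a "quarters budgeted" threshold are all made up for the example; only the $225K per quarter comes from the scenario.

```python
import math


def quarters_remaining(completed_per_quarter, remaining_items):
    """Forecast quarters still needed, using average observed throughput."""
    if not completed_per_quarter:
        raise ValueError("need at least one quarter of observed throughput")
    average = sum(completed_per_quarter) / len(completed_per_quarter)
    if average <= 0:
        return math.inf  # nothing is getting done, so no finish date
    return math.ceil(remaining_items / average)


def quarterly_review(completed_per_quarter, remaining_items,
                     quarters_budgeted, quarterly_cost_k):
    """Return (signal, quarters needed, projected remaining cost in $K)."""
    needed = quarters_remaining(completed_per_quarter, remaining_items)
    projected_cost_k = needed * quarterly_cost_k
    # "reconsider" means a human decides: pivot, cut scope, or kill.
    signal = "proceed" if needed <= quarters_budgeted else "reconsider"
    return signal, needed, projected_cost_k


# Hypothetical data: 18 and 22 backlog items finished in the first two quarters.
print(quarterly_review([18, 22], 40, 2, 225))  # ('proceed', 2, 450)
print(quarterly_review([18, 22], 45, 2, 225))  # ('reconsider', 3, 675)
```

The forecast is still an estimate; it is simply made after real throughput data exists.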

Other Alternatives

I could choose to do a traditional approach to marketing and then build without the estimate on how much it will cost. I could reverse this and do a lean start-up approach to understanding the market demand and transition to an estimated approach as well.

One thing to note: the lean start-up approach could be modified so that once the MVP (or MMP) is defined, the company starts building, knowing that some revenue stream will come in even if it does not yet know whether that revenue will reach the predicted level. This would decrease time to market for the MMP and might even allow the product to expand into the other markets, or the company could decide not to expand and let it become a complementary service that companies purchase.

Take-Aways for the Reader

First, I painted a rosy picture of delivery. It is very likely delivery will not go as smoothly as this. Thus when I reach the end of a quarter where a release is defined, I need to decide whether to continue, stop, or release with what I have. In a traditional, estimated viewpoint, I am deciding whether to add more time to the schedule (and potentially run over budget as a result) to release with the fully expected scope, or to release with less scope on time and on budget. Regardless of whether I estimated how long it would take, I can use my actual throughput of work as the predictor of whether to continue. Again, as I mentioned before, this is a form of estimation; I am just choosing to do it later.

Second, I didn’t get into estimation that may occur (or not) by the team.

The primary use of story points (or another team estimation method on stories) is to know whether a story is small enough to be completed easily within an iteration. Some teams get really good at understanding their sizing and can stop using story points. (Lunar Logic’s estimation cards are a good insight into this: every story is either a 1 – we can take it on, TFB – too f-ing big, or NFC – no f-ing clue.) I encourage teams to examine a story’s independence and testability to gain this understanding, as these two parts of the INVEST criteria are what feed the complexity thinking one needs when applying story points. Teams can still measure throughput and lead time, as these can be useful for later questions when an estimate may be needed about ‘how much’ or ‘how long’.

Third, I’d like to change the convo a little bit. I personally think value and cost are decoupled. They are coupled only in net value (value minus cost, aka ROI). A great place to get a sense of this is Reinventing Project Management by Shenhar and Dvir. In this book, the authors describe two scenarios where the cost of the project had nothing to do with the end value of what was produced. Another change is ridding ourselves of project thinking when we are doing product efforts. Product life-cycles extend beyond initial delivery, and when we use project thinking we often shortchange our understanding of both costs and value in the long term, whether we estimate or not.
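
To make the decoupling concrete, here is a small Python sketch of net value as value minus cost, using the scenario's figures. Treating three years of predicted revenue as a stand-in for value, and ignoring ongoing operating costs, are simplifying assumptions of mine; the "market never materializes" case is hypothetical.

```python
def net_value_k(value_k, cost_k):
    """Net value in $K: the only place value and cost meet."""
    return value_k - cost_k


# Costs from the scenario, in $K.
research_cost_k = 150
build_cost_k = 900
total_cost_k = research_cost_k + build_cost_k  # 1050

# Assumption: predicted revenue for years 1-3 stands in for value.
predicted_revenue_k = [10_000, 20_000, 30_000]

# Same cost in both cases; the value outcome is independent of it.
scenarios = {
    "revenue as predicted": sum(predicted_revenue_k),
    "market never materializes": 0,
}

for name, value_k in scenarios.items():
    print(name, net_value_k(value_k, total_cost_k))
# revenue as predicted 58950
# market never materializes -1050
```

Hitting the cost estimate exactly says nothing about which of these two outcomes occurs.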

Fourth, I hope this gives some insight into when choices can be made about estimation; it is not a simple binary answer, but one of fidelity. In some cases, one will want to run several detailed simulations in order to understand whether an undertaking should be done. In other cases, maybe we can just get started with no estimate whatsoever. Humans never actually escape mental models of estimation, however; even a zero on this range assumes we will get some learning insight that has value, and that in itself is an intuitive estimate. We certainly discovered this at the first Agile Dialogues unconference. (Biggest personal disappointment at this unconference is that the person that helped shape the theme then chose not to come after indicating they would.) What the thinking in the #noestimates ‘movement’ is trying to do is change the nature of this and question what our assumptions and beliefs are about what and when to estimate.
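
At the high-fidelity end of that range, "running several detailed simulations" might look something like the following Monte Carlo sketch in Python. It resamples past quarterly throughput to get a spread of possible finish times. The throughput history, backlog size, and function names are hypothetical, and this is one simple way to simulate, not a prescribed method.

```python
import random


def simulate_quarters(throughput_history, backlog_size, runs=10_000, seed=42):
    """Resample observed throughput to simulate quarters needed to finish."""
    if not throughput_history or any(t <= 0 for t in throughput_history):
        raise ValueError("throughput history must contain only positive values")
    rng = random.Random(seed)  # seeded so repeated runs give the same spread
    results = []
    for _ in range(runs):
        remaining, quarters = backlog_size, 0
        while remaining > 0:
            remaining -= rng.choice(throughput_history)
            quarters += 1
        results.append(quarters)
    return sorted(results)


def percentile(sorted_values, pct):
    """Value at the given percentile of an already sorted list."""
    index = min(len(sorted_values) - 1, int(len(sorted_values) * pct / 100))
    return sorted_values[index]


# Hypothetical: 15, 20, and 25 items finished in past quarters; 60 items remain.
outcomes = simulate_quarters([15, 20, 25], backlog_size=60)
print("50th percentile:", percentile(outcomes, 50), "quarters")
print("85th percentile:", percentile(outcomes, 85), "quarters")
```

The output is a range with likelihoods attached, which is a different kind of answer than a single date.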

I’ll close by saying that there are people that add well to this conversation – they bring in well-formulated opinions. There are others that prefer to provoke – this occurs on both sides unfortunately. I personally seek actual dialogue so we can get out of binary thinking on this (see the Agile Bramble). I point out circumstances where not estimating works, not to argue that not estimating is the path to follow (I’ve never said ‘never estimate’), but to have more dialogue about when we should estimate and what we should estimate. Notice I didn’t say ‘if’. I’ve had someone state I evidently had no evidence when I have given some. I’m also not interested in endless debate – ask yourself whether you feel you need to ‘win’ an argument. If so, you are not in a mindset for dialogue or learning, but to prove a point.

With this, I hope I have shown that the Rule of 3 applies 🙂

So I investigated how these normally got funded; any estimate done is simply reported up the chain (as requested Development monies), but the funds are actually provided by the programs that need the work done for them. These are used as a projection for the branch and nothing more. Any work really done goes through its own process of requesting and then actual money is provided. There are many change management approaches out there. Most focus on weaknesses you need to change; several others out there focus more on things to keep the same and build upon. Most change agents then further target using one approach or another, perhaps based on context, or perhaps as a ‘goto’ tool (you know what they say about goto statements – don’t use them!).

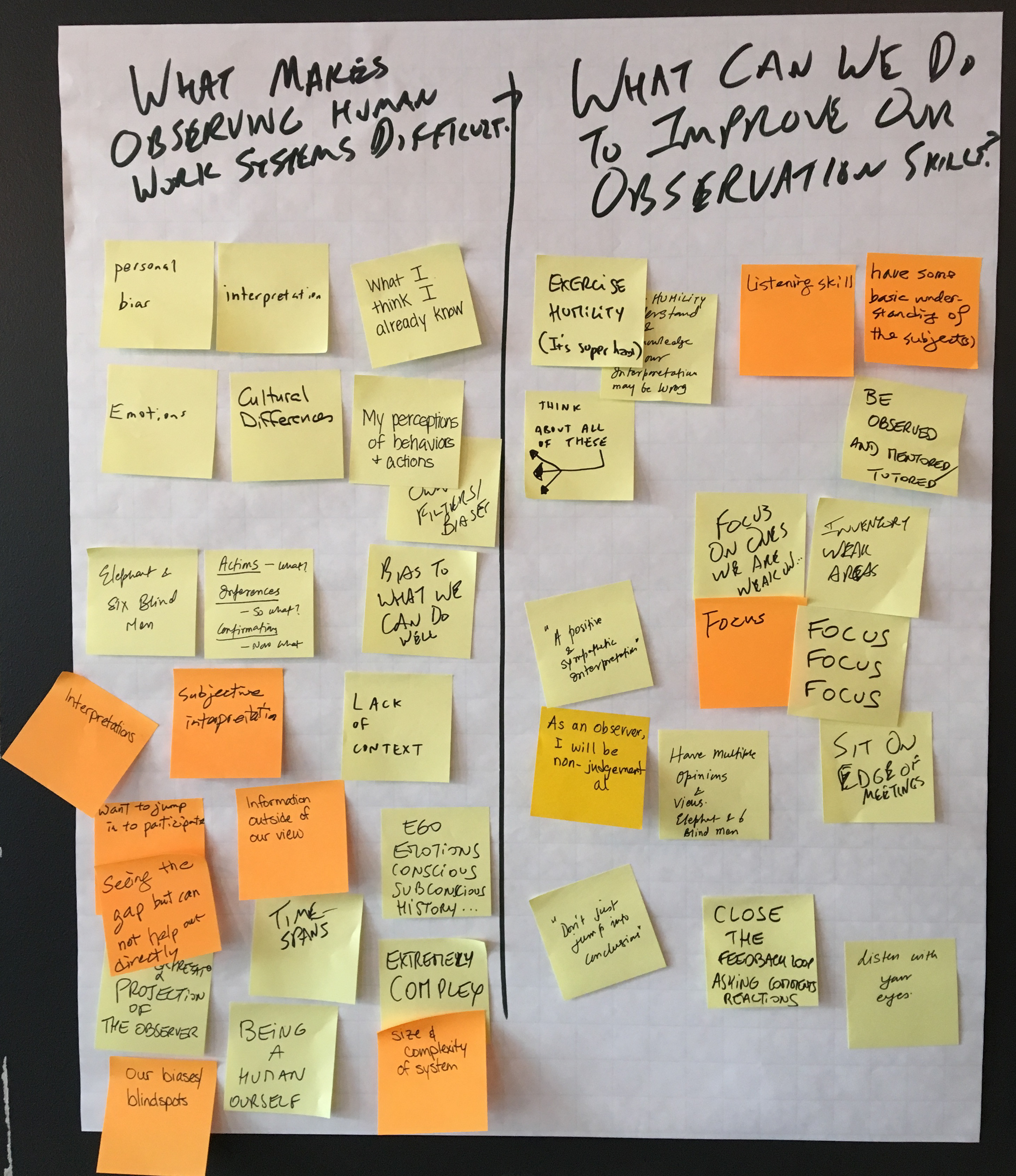

Yet only applying strengths does not help you eliminate weaknesses. When talking about change such as what may occur in an Agile Transformation, another approach to look at is the