First, let me make clear how I am looking at portfolio management. I’ll use this fairly common definition: it’s the oversight of a set of investments made to meet a set of objectives. Since we’re dealing with a set of investments, there are several lenses we can apply to view them. This post looks at the first of the lenses we can use to develop suitable metrics.

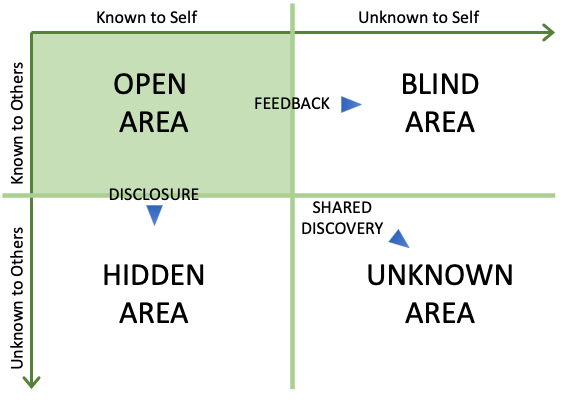

The first lens is what I’ll call the SEE lens, which stands for sustainability, effectiveness, and efficiency. To give you an idea of where future posts will go, I’ll briefly describe the other lenses first.

The second lens is where an investment sits in its life-cycle: is it a new product or service, one that is being matured (i.e. the organization is trying to grow it), one that is already mature, or one that the organization is retiring? The third lens I’ll call a spatial lens, and it is tied to either market segments or geography. You could apply both, but I’d be careful to ensure that the delineation is needed. BTW, geography doesn’t have to mean what we generally think of: regions, countries, et cetera. In some cases it may be more appropriate to slice by rural, urban, and suburban, or maybe by climate; it will depend on the investment. A fourth lens may be built around the technology or skills used. Again, in this post I am only going to deal with the SEE lens. In future posts, I’ll focus on the other lenses.

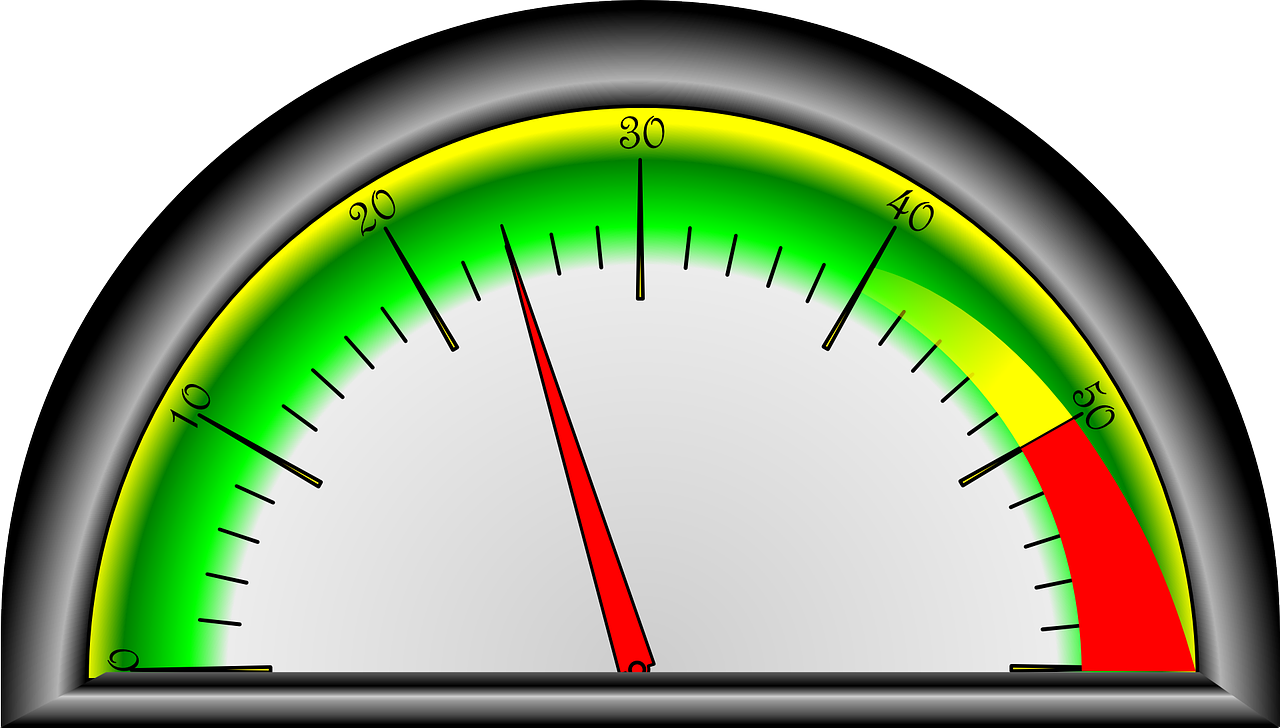

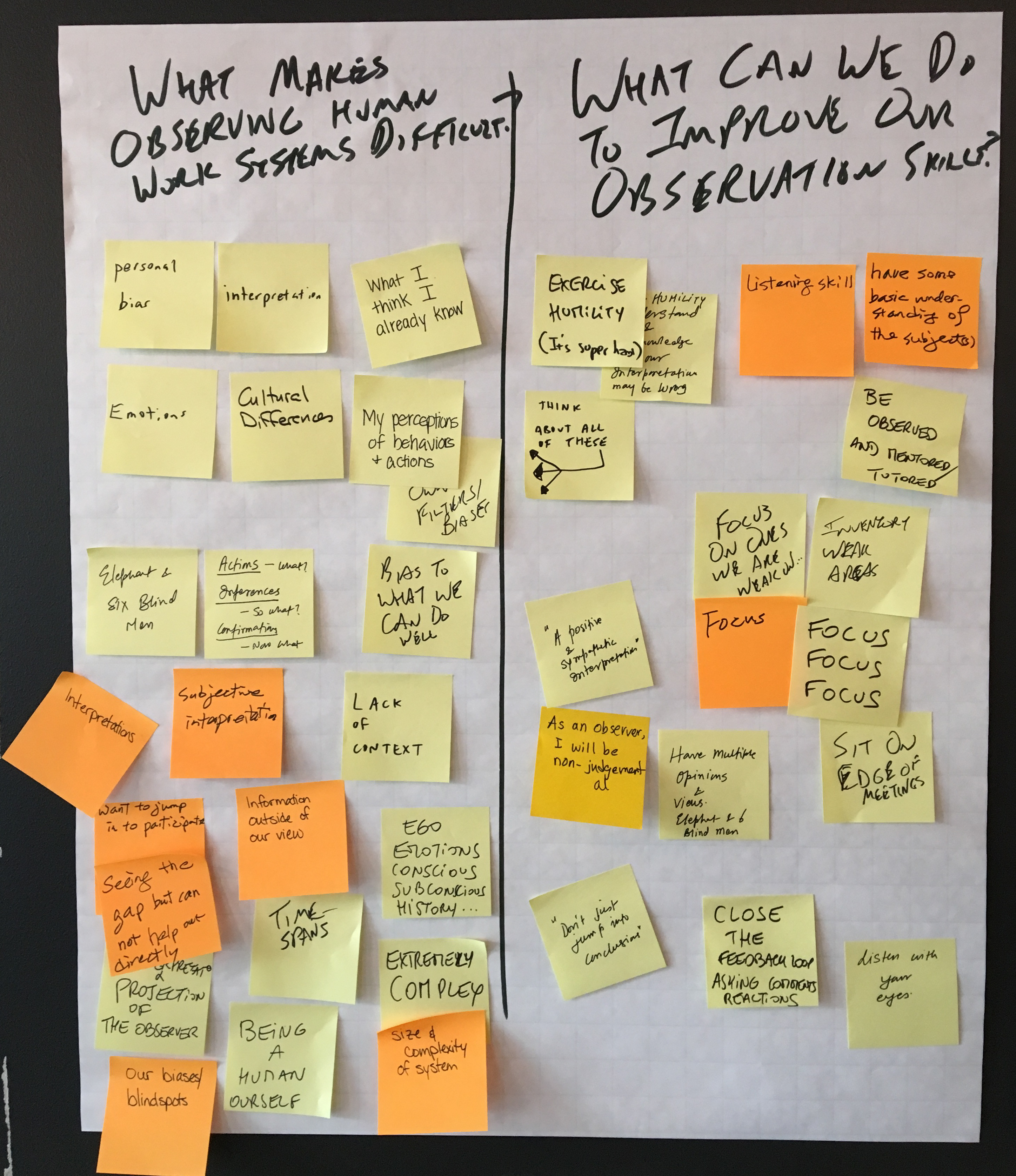

The sustainability lens asks how well I can keep this investment going. This is probably your most important lens, as the other lenses assume some level of sustainment. Useful metrics center on employee morale and customer relationships. You might measure employee morale with job satisfaction surveys or happiness indices. You can also look at overtime as an indicator (especially if the job is salaried rather than paid hourly).

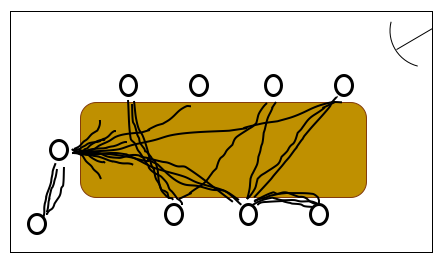

For knowledge work, I would suggest measuring whether people feel the work they do is meaningful, feel responsible for the outcomes of their work, and are able to know the results of their work. These are the three critical psychological states as defined by Hackman and Oldham. If any of these start to dip, looking at how jobs are designed gives us some indication of what we can do to get them back on track. We can also gather metrics on factors that contribute to group effectiveness, drawn from the organizational context or interpersonal processes.

For customer satisfaction, we can look at a net promoter score (NPS) as one easy-to-gather metric; the issue is that it may not reveal the exact nature of a problem if it slips. Customer referrals would be the realization of a high NPS: customers are actually recommending the organization to others, not just saying they will. A metric around renewals or repeat sales can also help measure this satisfaction. Lastly, you could look at the inverse of satisfaction, the level of complaints received, as another metric.
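To make the NPS concrete, here is a minimal sketch of how it is usually computed, assuming the standard 0 to 10 "how likely are you to recommend us?" survey question, where ratings of 9 or 10 count as promoters and ratings of 0 through 6 count as detractors. The function name and the survey responses are made up for illustration.

```python
def net_promoter_score(ratings):
    """Return the NPS (from -100 to 100) for a list of 0-10 survey ratings."""
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 through 6
    # Passives (7 or 8) count toward the total but toward neither group.
    return 100.0 * (promoters - detractors) / len(ratings)


# Hypothetical survey responses, not real data.
survey = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(net_promoter_score(survey))  # 5 promoters, 3 detractors out of 10 -> 20.0
```

Notice that the single number hides who the detractors are and why they are unhappy, which is why I’d pair it with referrals, renewals, or complaint counts.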

The sustainability metrics are leading indicators for the effectiveness and efficiency metrics. When they slip in undesirable directions, problems will appear later in the other two.

Our second lens is the effectiveness lens. It is second in importance after sustainability. Why? Without sustainment, your effectiveness becomes irrelevant, and being efficient is meaningless if you aren’t being effective.

Effectiveness is highly dependent on the mission of the organization. For commercial companies, sales, revenue, and market share are applicable to this lens. I would suggest that finding a metric that indicates market fit would also be beneficial. For a services organization, this might be how well you are solving a customer’s problem, or whether you are providing the right talent to help the customer perform their mission. Product companies usually focus on their position in the market, finding ways to measure this based on product features. For non-profit or public institutions, this will align entirely with what your organization is chartered to do.

One thing to be careful about is using comparisons with other products or services as your means of measuring. That will only tell you how effective you are in relative terms, not in concrete ones. It’s not that comparisons aren’t useful, but let’s say your market fit is how well you can transport people between different points at a specific cost. If you only compare yourself to others that do this in a similar manner, the comparative view may keep you from seeing a potentially disruptive approach. If you use a more concrete metric that doesn’t rely on comparisons, you can look for ways to improve this metric yourself, regardless of the competition.

Some product quality metrics also fall within the effectiveness lens. Market fit is a form of quality metric. Other factors might be reliability, usability, or security; knowing which are applicable to your product or service can help you find the right metrics for them.

Efficiency is the last lens. Metrics you may use within this lens are profit margins, time to market, waste in production, production costs, or labor time. If the organization services the products it produces, a quality metric around maintainability, like mean time to repair, may fit here as well.
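Two of these are simple enough to sketch in a few lines. The following is only an illustration under my own assumptions: mean time to repair is taken as the average duration of completed repairs, profit margin as profit divided by revenue, and the function names and figures are invented for the example.

```python
def mean_time_to_repair(repair_hours):
    """Average duration, in hours, of a list of completed repairs."""
    if not repair_hours:
        raise ValueError("need at least one repair")
    return sum(repair_hours) / len(repair_hours)


def profit_margin(revenue, costs):
    """Profit as a fraction of revenue (0.25 means a 25% margin)."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - costs) / revenue


# Hypothetical figures, not real data.
print(mean_time_to_repair([2.0, 4.0, 3.0]))  # 9.0 hours over 3 repairs -> 3.0
print(profit_margin(200_000, 150_000))       # 50,000 / 200,000 -> 0.25
```

How your organization defines a "repair" or which costs it counts will vary, so treat these definitions as a starting point to adapt.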

I hope this gives you a starting point in looking for metrics that can help your organization.